With the basics of Smart Cloud Analytics Log Analysis (SCALA) in place from scenario1, I’ll begin to share additional scenarios that place emphasis on getting system and application log data into the tool for processing.

With both the OpenBeta milestone 1 and 2 drivers, the main emphasis has been on log acquisition using what’s called “batch mode”. Basically, a local copy of Tivoli’s Log File Agent (LFA) is installed on the SCALA box, and it monitors three directories on the local server under the /logsources/* path. Whenever a file is placed in one of the three monitored directories, the LFA begins to process it and posts messages to the SCALA EIF Receiver for ingestion into the tool.
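Batch mode boils down to “drop a file into a watched directory and it gets picked up.” As a rough illustration of that polling idea only (not how the LFA is actually implemented), the Python sketch below reports files that have appeared in a set of watched directories since the previous poll. The helper name `find_new_files` is hypothetical, and any directory names you pass to it are placeholders, since the post only gives the /logsources/* path.

```python
import os


def find_new_files(watch_dirs, seen):
    """Return files that appeared in watch_dirs since the last poll.

    `seen` is a set of already-reported paths and is updated in place.
    Illustrative polling only; this is not how the LFA is implemented.
    """
    new_files = []
    for directory in watch_dirs:
        for name in sorted(os.listdir(directory)):
            path = os.path.join(directory, name)
            # Report each regular file exactly once.
            if os.path.isfile(path) and path not in seen:
                seen.add(path)
                new_files.append(path)
    return new_files
```

Calling it on a timer with the same `seen` set returns only the files dropped in since the previous call.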

In scenario1, this was extended to show how the included LFA could be exported from the local installation and installed on a remote system to “stream” logs from the remote system’s WebSphere Liberty based application to the SCALA system. If you’re an existing Tivoli Log File Agent customer, you may already have a foundation in place for streaming logs in from all of your remote systems. If you’re not an existing LFA customer, you might be entitled to use the LFA when purchasing this product.

In this blog post, I’ll introduce scenario2, a technique for local system and application log consolidation that uses rsyslog along with the LFA to ship logs off to a remote SCALA system. This technique will demonstrate creating a consolidated logfile of standard CentOS system logs (/var/log/*) and local application logs (SCALA, WebSphere Liberty, etc.) using an optimized key value pair (KVP) format to make the best use of the SCALA Generic Annotation feature.

Syslog has been around for decades and has been used as the de facto best practice for standardized log collection, shipping and consolidation by companies worldwide. There are a number of productized implementations of the syslog standards, and nearly every operating system ships at least one of them as part of its standard package. In our case, CentOS 6.4 includes rsyslog. Other popular packages include the original syslogd and syslog-ng. Whichever package your operating system provides should support techniques similar to the ones I’ll be showing with rsyslog.

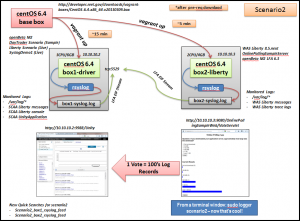

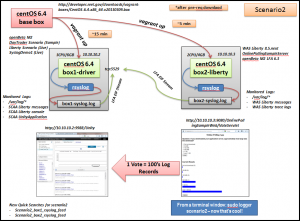

Architectural Overview

The following picture represents the architectural elements of scenario2. This is something that you can easily install and try out on a modern laptop (I use a ThinkPad W510 with an Intel i7 (4 cores) and 16GB of memory). After you’ve downloaded the OpenBeta M2 driver, this can be up and running in about 30 minutes.

Key Changes from Scenario1

Here are the key changes from scenario1. The scenario1 configurations are kept in place so that scenario1 continues to function.

- On box1-driver, add rsyslog-based collection of all /var/log/* files

- On box1-driver, add rsyslog-based collection of SCALA’s WebSphere Liberty server logs (messages and console) and the SCALA UnityApplication logs

- On box1-driver, use the local LFA to ship the rsyslog-generated box1-syslog.log aggregate logfile, which is placed in the /logsources/GAContentPack directory

- On box2-liberty, add rsyslog-based collection of all /var/log/* files

- On box2-liberty, add rsyslog-based collection of the WebSphere Liberty server messages and trace logs

- On box2-liberty, use the local LFA to ship the rsyslog-generated box2-syslog.log aggregate logfile to SCALA

Using rsyslog with SCALA

I’d like to spend a little time talking about how I’m using CentOS 6.4’s included rsyslog. The included version is v5.8.10, which appears to be over a year old; more current versions are available here if desired.

The core of rsyslog is configured using the rsyslog.conf file found in the /etc directory. Within this file, a number of changes are made to specify what logs will be collected, how they will be processed or filtered and what will be done with them. Think of this as a simple input – process/filter – output flow for logs on the local system.

The first addition to the default rsyslog.conf file is the Text File Input Module (imfile). This allows us to take any standard text file of printable characters delimited by line feeds and convert each line into a syslog message that we can then process, filter and ship off somewhere locally or to a remote location.
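To make the idea concrete, here is a minimal Python sketch of what a text file input does, not imfile’s actual implementation: it reads only the complete lines appended since the last run, prefixes each one with a tag (playing the role of $InputFileTag), and remembers how far it got in a state file (loosely mirroring $InputFileStateFile). The function name `read_new_lines` and the plain-integer state-file format are hypothetical.

```python
import os


def read_new_lines(log_path, state_path, tag):
    """Return tagged messages for lines appended to log_path since the last call.

    Hypothetical helper: the byte offset already processed is stored as a plain
    integer in state_path (rsyslog's real state file uses its own format).
    """
    offset = 0
    if os.path.exists(state_path):
        with open(state_path) as f:
            offset = int(f.read().strip() or 0)
    if offset > os.path.getsize(log_path):
        offset = 0  # file was truncated or replaced, so start from the top

    messages = []
    with open(log_path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # partial last line: wait until it is complete
            offset += len(raw)
            line = raw.decode("utf-8", "replace").rstrip("\n")
            messages.append(f"{tag} {line}")  # tag plays the role of $InputFileTag

    with open(state_path, "w") as f:
        f.write(str(offset))
    return messages
```

Keeping the offset outside the process is what lets a restart resume where it left off instead of re-sending the whole file.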

The next change is the creation of a couple of templates that specify the desired format of each syslog message. The default rsyslog format isn’t ideal for use with SCALA, so I’ve created key value pair (KVP) and comma separated value (CSV) formats that demonstrate the usefulness of the rsyslog template option. I’m using the KVP template in scenario2 because it allows us to use the SCALA Generic Annotator and immediately gain some powerful Discovered Patterns for searching logs in the tool.

    #kvp format
    $template scaa-syslog-demo-kvp,"%$year% %timestamp:::date-rfc3164%,hostname=%hostname%,relayhost=%fromhost%,tag=%syslogtag%,message=%msg%,facility=%syslogfacility-text%,severity=%syslogseverity-text%,processID=%procid%,priority=%pri%\n"

The available fields are described here.
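For a feel of what each consolidated line looks like, the Python sketch below assembles a record in the same field order as the scaa-syslog-demo-kvp template. It is only an illustration, not how rsyslog renders templates: `render_kvp` is a hypothetical name, the timestamp is passed in already formatted, and the priority value uses the standard syslog encoding, facility code * 8 + severity code.

```python
FACILITY_CODES = {
    "kern": 0, "user": 1, "mail": 2, "daemon": 3, "auth": 4, "syslog": 5,
    "lpr": 6, "news": 7, "uucp": 8, "cron": 9, "authpriv": 10, "ftp": 11,
    **{f"local{i}": 16 + i for i in range(8)},
}
SEVERITY_NAMES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]


def render_kvp(year, timestamp, hostname, relayhost, tag, message,
               facility, severity, procid=""):
    """Build one line in the field order of the scaa-syslog-demo-kvp template."""
    # Standard syslog PRI encoding: facility code * 8 + severity code.
    pri = FACILITY_CODES[facility] * 8 + SEVERITY_NAMES.index(severity)
    return (f"{year} {timestamp},hostname={hostname},relayhost={relayhost},"
            f"tag={tag},message={message},facility={facility},"
            f"severity={severity},processID={procid},priority={pri}\n")
```

For example, a local0.info message gets priority=134 (16 * 8 + 6). Because the message text is written as-is, a message that contains commas makes a naive split on commas ambiguous, which is worth keeping in mind when parsing these lines.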

I’ve left all of the standard /var/log/* file rules in place and added specific files on each box for rsyslog to collect. As an example, this is the configuration added to collect the SCALA /logs/UnityApplication.log file.

    # Watch /opt/scla/driver/logs/UnityApplication.log
    $InputFileName /opt/scla/driver/logs/UnityApplication.log
    $InputFileTag SCAA-UnityApplication:
    $InputFileStateFile state-SCAA-UnityApplication
    $InputRunFileMonitor

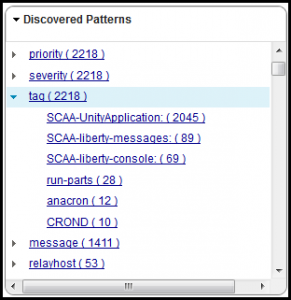

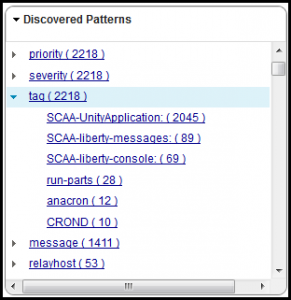

Note the very powerful $InputFileTag option. It gives every logfile we’re monitoring a unique name within the syslog message flow. We can easily turn this into a KVP (tag=) that is used within SCALA Discovered Patterns for directed search and filtering of logs.
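To see why the tag= field is so handy, here is a Python sketch that turns one consolidated KVP line back into fields and filters on the tag. This is not how the SCALA Generic Annotator works internally; `parse_kvp` and `lines_with_tag` are hypothetical helpers that assume the field names of the scaa-syslog-demo-kvp template, and they split only on “,<known key>=” so commas inside the message text survive.

```python
import re

KVP_KEYS = ("hostname", "relayhost", "tag", "message", "facility",
            "severity", "processID", "priority")
# Split only on ",<known key>=" so commas inside the message text survive.
SPLITTER = re.compile(r",(" + "|".join(KVP_KEYS) + r")=")


def parse_kvp(line):
    """Turn one consolidated KVP line back into a dict of fields."""
    # With one capture group, re.split yields: prefix, key1, value1, key2, value2, ...
    parts = SPLITTER.split(line.rstrip("\n"))
    fields = {"timestamp": parts[0]}
    fields.update(zip(parts[1::2], parts[2::2]))
    return fields


def lines_with_tag(lines, tag):
    """Keep only the lines whose tag= field equals `tag`."""
    return [line for line in lines if parse_kvp(line).get("tag") == tag]
```

Filtering on tag=SCAA-UnityApplication: pulls one application’s records back out of the mixed stream, which is the same kind of directed search the Discovered Patterns panel offers.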

The final change is to use an action with the file output module (omfile) to create a single, consolidated logfile made up of all of the /var/log/* and specified application logs on the local system. The file is created in a specified location, with the proper file ownership, and uses the previously defined KVP template to structure the log messages.

Here’s an example:

    # This will take all default and custom monitored files, write them to the
    # consolidated file using the custom template we defined above in KVP format for SCALA GA
    $FileOwner scla
    $FileGroup scla
    *.* /opt/scla/driver/logsources/GAContentPack/box1-syslog.log;scaa-syslog-demo-kvp
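The `*.*` selector in front of the file path is what makes this action a catch-all: it means any facility at any severity. The Python sketch below illustrates the classic `facility.severity` matching rule, where a named severity matches that level and every more severe level. `selector_matches` is a hypothetical helper, and rsyslog extensions such as `=`, `!`, `none` and multi-selector lists are intentionally left out.

```python
SEVERITY_NAMES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]


def selector_matches(selector, facility, severity):
    """Classic syslog 'facility.severity' selector test.

    '*' matches any facility or any severity; a named severity matches that
    level and every more severe level (a lower code is more severe).
    """
    sel_facility, sel_severity = selector.split(".", 1)
    if sel_facility not in ("*", facility):
        return False
    if sel_severity == "*":
        return True
    return SEVERITY_NAMES.index(severity) <= SEVERITY_NAMES.index(sel_severity)
```

So `*.*` sends everything, including the imfile-generated messages, into the consolidated file, while a narrower selector such as `mail.info` would drop `mail.debug` messages.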

Any changes to the /etc/rsyslog.conf file must be made as root or with sudo. Once the changes have been made, issue the service rsyslog restart command to activate them.

Shipping to SCALA with the LFA

We’re using the same LFAs installed on each box in scenario1. For box1-driver, we just drop the rsyslog-generated logfile into the /logsources/GAContentPack directory, and the LFA processes it automatically with no changes to the LFA configuration needed. For box2-liberty, we make a simple addition to the LFA configuration file, appending the new rsyslog-generated consolidated logfile to the end of the LogSources line as shown:

    LogSources=/opt/scla/wlp/usr/servers/OnlinePollingSampleServer/logs/messages.log,/opt/scla/wlp/usr/servers/OnlinePollingSampleServer/logs/trace.log,/opt/scla/scenario2/box2-syslog.log
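If you script this step, the edit amounts to appending one path to a comma-separated list. Here is a minimal Python sketch, assuming the configuration is a plain text file with a single LogSources= line; `add_log_source` is a hypothetical helper that leaves every other line untouched.

```python
def add_log_source(conf_text, new_path):
    """Append new_path to the comma-separated LogSources= line, if not already listed."""
    prefix = "LogSources="
    out = []
    for line in conf_text.splitlines():
        if line.startswith(prefix):
            sources = [s for s in line[len(prefix):].split(",") if s]
            if new_path not in sources:
                sources.append(new_path)
            line = prefix + ",".join(sources)
        out.append(line)
    return "\n".join(out) + "\n"
```

Running it twice is harmless because an already-listed path is not appended again.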

The required configurations are pushed into SCALA to define the new log sources so everything is ready for logs to stream into SCALA.

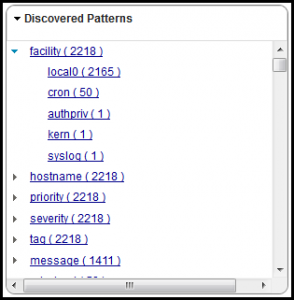

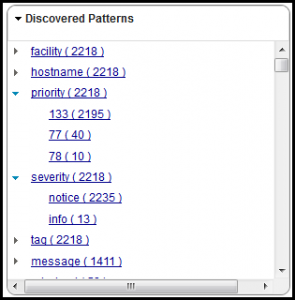

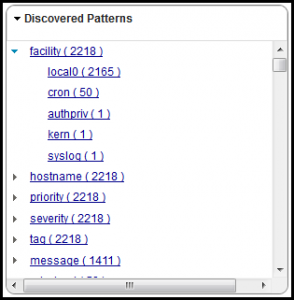

Exploring Logs from rsyslog using SCALA Search and Discovered Patterns

The purpose of the SCALA Generic Annotator is to give you an easy pathway to get just about any log record format into SCALA. Refer to the lower portion of this scenario1 blog posting or the manuals here for more information on the Generic Annotator. With scenario2, I’ve helped things along a bit by seeding the log with a KVP format so we get structured key fields in the Discovered Patterns panel automatically. They wouldn’t be there if we used the default rsyslog message format.

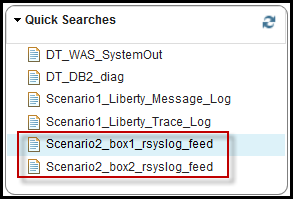

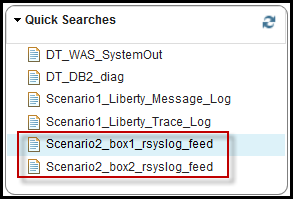

For scenario2, the following two Quick Searches have been added to SCALA, one for the consolidated rsyslog stream from each box. There will be duplicate messages in the system because I’ve kept the same application logs flowing in from scenario1 as well. You can simply click on a Quick Search, or select the log sources, to search across logs as they come in from each scenario and compare and contrast the two approaches.

If you let it run for a while, make some changes within SCALA, or cast some votes with the OnlinePollingSample app on box2, you’ll generate a ton of logs.

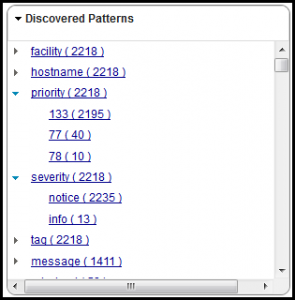

Here are a few examples of the Discovered Patterns panels available after clicking on the Scenario2_box1-rsyslog_feed. Note the key fields and value fields parsed from the logs using the Generic Annotator.

How to get started

1. Sign up for the SCALA Open Beta here.

2. Install VirtualBox (tested with 4.2.12 on Win7)

3. Install Vagrant (tested with 1.2.2 on Win7)

4. Download the git repo using either git clone https://github.com/dougmcclure/ILA-Open-Beta.git or download the repo as a .zip file and unzip

5. Download the SCAA OpenBeta Driver 2 and place it in the /shared/box1-files directory of the repo

6. Open a terminal or Windows command shell and navigate to the box1 repo directory. Issue the vagrant up command.

7. Open a new terminal or Windows command shell and navigate to the box2 repo directory. Issue the vagrant up command.

Point Firefox 17 to http://10.10.10.2:9988/Unity, log in with unityadmin/unityadmin and follow the blogs here for more information.

Scenario3 Preview

For scenario3, we’ll shift away from using the LFA on the edge systems and instead use rsyslog not only to collect logs from the local system but also to ship them to a centralized rsyslog server. At the centralized rsyslog server, we’ll consolidate all of the logs, process them into dynamically created files by source, and create one consolidated log from all of the edge systems that we can drop in for Generic Annotator processing via the LFA.